What is web scraping?

Web scraping, also called web harvesting or web data extraction, is the extraction of data from websites. Web scraping software may access the World Wide Web directly over the Hypertext Transfer Protocol (HTTP) or through a web browser.

While web scraping can be done manually by a software user, the term typically refers to automated processes implemented using a bot or web crawler. It is a form of copying, in which specific data is gathered and copied from the web, typically into a central local database or spreadsheet, for later retrieval or analysis.

If you have ever copied and pasted information from a website, you have done the same job as a web scraper, only on a tiny, manual scale.

Unlike tedious manual extraction, a web scraper automates this work and can retrieve hundreds, millions, or even billions of data points from the web.
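To make the idea concrete, here is a minimal sketch of the "extract and copy into a spreadsheet" step, using only the Python standard library. The sample HTML, the `LinkExtractor` class, and the `extract_links` and `links_to_csv` helpers are illustrative assumptions, not part of any particular scraping tool. A real scraper would first download the page and would likely use a more forgiving HTML parser.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment standing in for a downloaded web page.
SAMPLE_HTML = (
    '<ul>'
    '<li><a href="/item/1">Blue mug</a></li>'
    '<li><a href="/item/2">Red mug</a></li>'
    '</ul>'
)


class LinkExtractor(HTMLParser):
    """Collect (link text, href) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished (text, href) rows
        self._href = None    # href of the <a> tag currently open, if any
        self._text = []      # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside a link with an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def extract_links(html):
    """Return a list of (text, href) tuples found in the HTML string."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


def links_to_csv(links):
    """Serialize the rows as CSV text, ready to save as a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["text", "href"])
    writer.writerows(links)
    return buffer.getvalue()


if __name__ == "__main__":
    print(links_to_csv(extract_links(SAMPLE_HTML)))
```

The same two steps, extract and store, apply whether the target is two links or two million product pages. Only the download and parsing machinery grows.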

What are proxies?

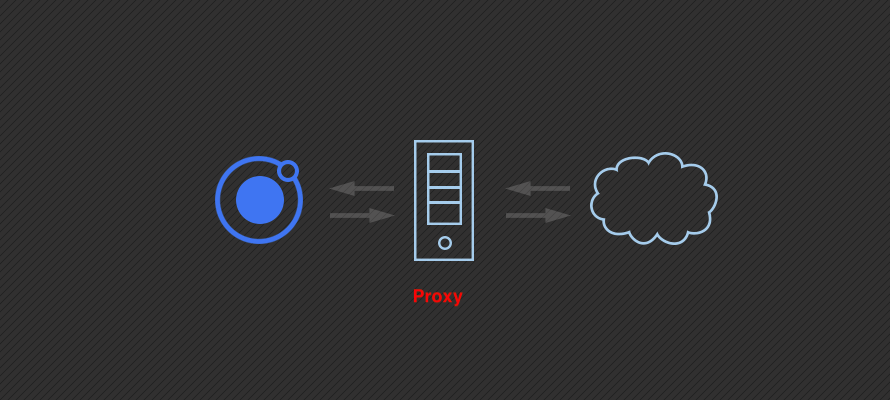

A proxy is a third-party server that lets you route your request through it and use its IP address in the process. When you use a proxy, the website you are requesting no longer sees your IP address but the IP address of the proxy, which lets you scrape the web anonymously if you choose.
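As a rough sketch of what "routing a request through a proxy" looks like in code, the example below uses Python's standard `urllib.request` module. The proxy address `http://proxy.example.com:8080` is a placeholder, and the helper names `make_proxy_opener` and `fetch_via_proxy` are assumptions made for illustration. Your proxy provider will give you the real host, port, and credentials.

```python
import urllib.request


def make_proxy_opener(proxy_url):
    """Build an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler(
        {"http": proxy_url, "https": proxy_url}
    )
    return urllib.request.build_opener(handler)


def fetch_via_proxy(url, proxy_url, timeout=10.0):
    """Download url through the proxy and return the raw response body."""
    opener = make_proxy_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()


if __name__ == "__main__":
    # Placeholder proxy address: replace it with one from your provider.
    opener = make_proxy_opener("http://proxy.example.com:8080")
    print(type(opener).__name__)
```

With a working proxy, `fetch_via_proxy("https://example.com", proxy_url)` would return the page body, and the target site would log the proxy's IP address rather than yours.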

Why do we need a proxy for web scraping?

There are a number of reasons why proxies are important for web scraping:

- Using a proxy (especially a pool of proxies, more on this later) allows you to crawl a website much more reliably, significantly reducing the chances that your spider will get banned or blocked.

- Using a proxy lets you make requests from a specific geographical region or device type (mobile IPs, for example), so you see the content the website serves for that location or device. This is extremely valuable when scraping product data from online retailers.

- Using a proxy pool allows you to make a higher volume of requests to a target website without being banned.

- Using a proxy allows you to get around the blanket IP bans some websites impose. For example, it is common for websites to block requests from AWS, because malicious actors have a track record of overloading websites with large volumes of requests from AWS servers.

- Using proxies lets you run many concurrent sessions against the same or different websites, because the requests are spread across many IP addresses.
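The proxy pool idea from the list above can be sketched as follows: rotate through the proxies in round-robin order, and stop using a proxy once a request through it fails. This is a simplified illustration, not a production design. The `ProxyPool` class, the `fetch_with_rotation` function, and the `fetch(url, proxy)` callable are all hypothetical names, and treating every `OSError` as a ban is an assumption made to keep the example short.

```python
import itertools


class ProxyPool:
    """Round-robin pool that skips proxies marked as banned."""

    def __init__(self, proxies):
        self._proxies = list(proxies)
        if not self._proxies:
            raise ValueError("the pool needs at least one proxy")
        self._banned = set()
        self._cycle = itertools.cycle(self._proxies)

    def next_proxy(self):
        # Look at each proxy at most once per call before giving up.
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if proxy not in self._banned:
                return proxy
        raise RuntimeError("all proxies in the pool are banned")

    def ban(self, proxy):
        self._banned.add(proxy)


def fetch_with_rotation(url, pool, fetch, max_attempts=3):
    """Try url through successive proxies until one attempt succeeds.

    fetch is any callable taking (url, proxy) that raises OSError on a
    network failure or block; it is an assumption of this sketch.
    """
    last_error = None
    for _ in range(max_attempts):
        proxy = pool.next_proxy()
        try:
            return fetch(url, proxy)
        except OSError as exc:
            pool.ban(proxy)  # do not reuse a proxy that just failed
            last_error = exc
    raise RuntimeError(f"gave up on {url} after {max_attempts} attempts") from last_error


if __name__ == "__main__":
    def fake_fetch(url, proxy):
        # Stand-in for a real download: the first proxy is "blocked".
        if proxy == "http://p1.example.com:8080":
            raise OSError("blocked")
        return f"{url} via {proxy}"

    pool = ProxyPool([
        "http://p1.example.com:8080",
        "http://p2.example.com:8080",
        "http://p3.example.com:8080",
    ])
    print(fetch_with_rotation("https://example.com", pool, fake_fetch))
```

In practice you would probably ban a proxy only after repeated failures or specific status codes, and release it again after a cool-down period.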

What is the best proxy for scraping? (Editor's choice)

When it comes to proxies for web scraping, the best proxies are the ones that work on your target website. Each website has its own anti-spam and anti-scraping system, and what works on Twitter might not work on YouTube. Still, some providers offer proxies that work on most complex websites, so it is possible to name a good general choice.

My own choice is Bright Data. It offers several kinds of proxy (datacenter, static residential, residential, and mobile), and I find it easy and flexible to use.

Conclusion

Proxies are very important in web scraping because they address two problems: IP bans and access to geotargeted web content. However, not every proxy will work for every web scraping project. Choose proxies or proxy APIs based on your project requirements, budget, and experience.

In the next post, we will look at the differences between the kinds of proxy: datacenter, static residential, residential, and mobile.

I am Tuan from Vietnam. I am a freelance programmer specializing in web scraping, web automation, and Python scripting, with more than 7 years of experience in these fields.