Table of Contents

What is web scraping?

Web scraping, also referred to as web harvesting or web data extraction, is the process of collecting data from websites. This can be accomplished using specialized software that interacts with websites either directly through the Hypertext Transfer Protocol (HTTP) or by simulating a user’s actions via a web browser.

Although it is possible to manually scrape data from websites, the term “web scraping” generally describes automated methods that use bots or web crawlers to gather and store data, typically in a central database or spreadsheet for later use or analysis. Essentially, web scraping automates the same process as copying and pasting information from a website, but on a much larger scale.

In other words, web scraping automates the retrieval of data from websites, collecting massive amounts of information from the internet much more efficiently than manual extraction.

What are proxies?

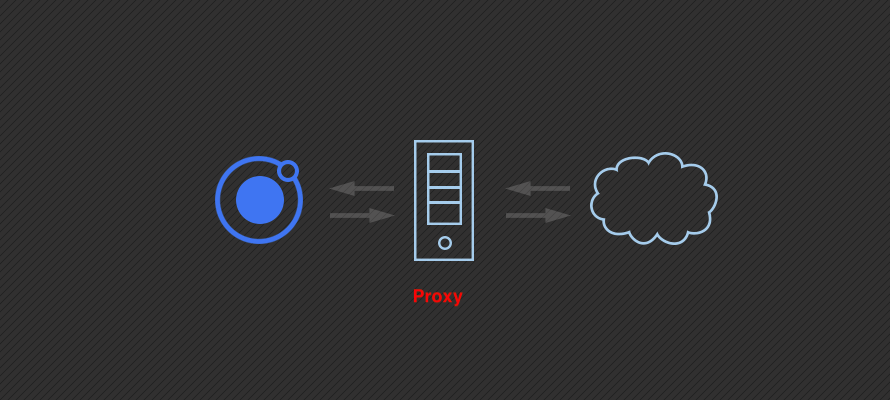

A proxy server acts as an intermediary between your device and the website you’re accessing. When you use a proxy, your request is routed through the proxy server, and the website sees the IP address of the proxy rather than your own. This allows you to browse the web anonymously and, in the context of web scraping, helps to avoid detection and blocking by websites.

Why do we need a proxy for web scraping?

There are several reasons why proxies are essential for web scraping:

- Reliability: Using a proxy (or a pool of proxies) helps reduce the likelihood of your web scraper being banned or blocked by websites.

- Geolocation & Device Targeting: Proxies allow you to send requests from specific geographic regions or devices (e.g., mobile IPs). This is especially useful when scraping data that varies by location or device, such as prices on e-commerce sites.

- Volume of Requests: A proxy pool enables you to make a higher volume of requests to a target website without triggering anti-scraping mechanisms.

- Bypassing IP Bans: Some websites impose blanket bans on certain IP ranges, such as those associated with cloud services like AWS. Proxies allow you to bypass these restrictions.

- Concurrent Sessions: Proxies allow you to make multiple concurrent requests to the same or different websites, speeding up your data collection process.

What is the best proxy for scraping? (Editor choice)

The best proxy for web scraping depends on the specific website you are targeting. Each site has its own anti-scraping measures, so what works for one might not work for another. However, some proxy providers offer services that work with a variety of websites.

In my experience, Bright Data is an excellent choice. They offer different types of proxies, including Datacenter, Static Residential, Residential, and Mobile proxies, and their services are user-friendly and flexible.

Conclusion

PProxies play a crucial role in web scraping by helping to avoid IP bans and access geographically restricted content. However, not all proxies are suitable for every project. Depending on your project’s requirements, budget, and level of experience, you can find proxies or proxy APIs that will work best for your needs.

In the next post, we’ll explore the differences between various types of proxies: Datacenter, Static Residential, Residential, and Mobile.

I am a Freelancer in programming specifically Python Scripting, Web scraping, and Web automation with 10+ years of experience.